Speech Data Annotation: Speech Recognition Technology in Self-Driving Cars

By Anolytics | 02 August, 2023 in Self Driving | 4 mins read

Speech recognition technology plays a crucial role in the development of self-driving cars, enabling passengers to interact with the vehicle through voice commands. This advanced feature enhances the overall driving experience by providing a convenient and hands-free way to control various aspects of the vehicle’s functionality.

Using sophisticated algorithms and machine learning techniques, the car’s onboard computer system can accurately recognize and interpret spoken commands from passengers. This technology allows passengers to perform a wide range of functions without manual input, such as adjusting temperature settings, changing music or radio stations, navigating to specific destinations, making phone calls, and more.

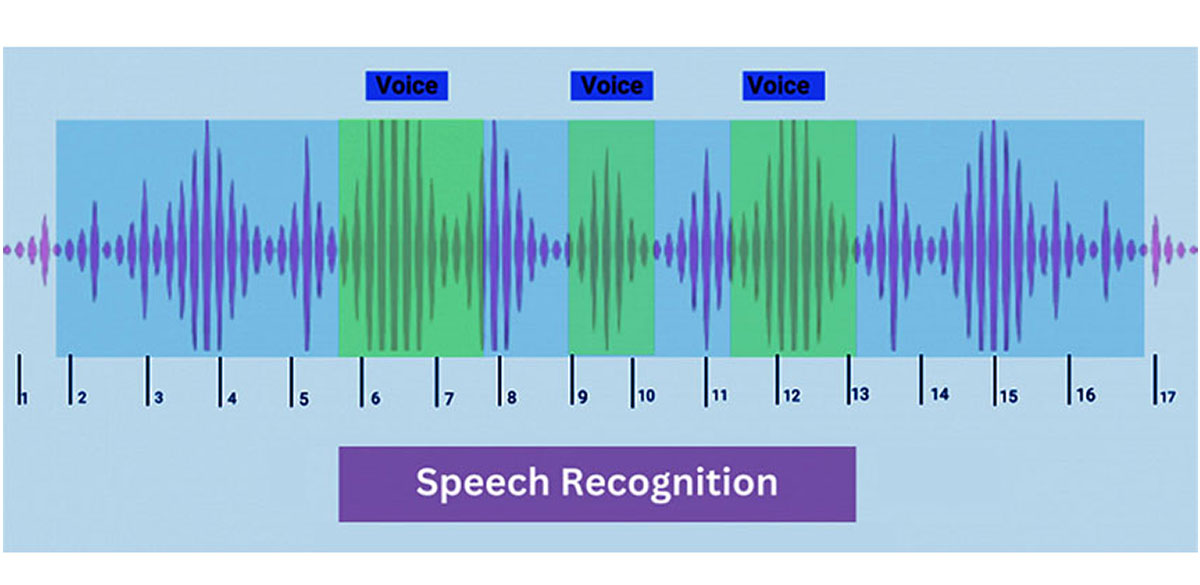

The speech recognition system in self-driving cars relies on natural language processing to understand spoken commands. The system converts the audio input into digital data, analyzes it, and then matches the result against pre-defined commands or patterns to determine the intended action.
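As a rough illustration of the final matching step, here is a minimal Python sketch. It assumes the audio has already been converted to text by a separate recognizer, and the pattern table and the `match_command` function are hypothetical names invented for this example. A production system would more likely use a trained language-understanding model than hand-written patterns.

```python
import re

# Hypothetical pre-defined command patterns (assumed for illustration only).
COMMAND_PATTERNS = {
    "set_temperature": re.compile(r"\bset (?:the )?temperature to (\d+)\b"),
    "navigate": re.compile(r"\b(?:navigate|take me) to (.+)"),
    "call_contact": re.compile(r"\bcall (.+)"),
}


def match_command(transcript):
    """Return (intent, argument) for the first matching pattern, else None."""
    text = transcript.lower().strip()
    for intent, pattern in COMMAND_PATTERNS.items():
        match = pattern.search(text)
        if match:
            return intent, match.group(1).strip()
    return None


print(match_command("Please set the temperature to 22"))
print(match_command("Take me to the airport"))
print(match_command("Open the sunroof"))  # no pattern matches this command
```

Commands that match no pattern return `None`, which is where a real system would ask the passenger to rephrase.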

Benefits of Speech Recognition in Self-driving Vehicles

Self-driving cars can benefit from speech recognition technology in several ways. Among the advantages are:

Interaction with self-driving cars without manual input or physical contact: Speech recognition technology allows passengers to interact with self-driving cars without having to touch the controls. With hands-free operation, passengers can control various vehicle functions while remaining engaged in other activities.

Speech recognition contributes to better road safety by reducing the need for manual controls and the distractions they cause. When using voice commands, passengers can adjust settings and make phone calls while maintaining focus on the driving environment.

Voice commands provide a natural and intuitive way to communicate with a self-driving vehicle. By speaking commands conversationally, passengers of all ages and technical backgrounds can interact with the system much as they would with another person.

Speech recognition technology offers passengers with disabilities or mobility challenges an alternative to traditional physical controls. With voice commands, a broader range of people will be able to use self-driving cars.

Controlling various aspects of the vehicle is more efficient and convenient with voice commands. The voice interaction feature increases passenger convenience by reducing manual effort and time spent adjusting settings, requesting actions, and entering destinations.

Passengers can interact with the car's systems through speech while still focusing on their surroundings. With a lighter cognitive load, they can maintain better situational awareness and make better decisions on the road.

A speech recognition system can be tailored to suit the needs of each passenger. As the self-driving car learns and adapts to individual speech patterns, preferences, and language nuances, the interface can become more personalized and customized.

Speech recognition technology makes self-driving cars more usable, safer, and more accessible. By letting passengers interact hands-free and intuitively, it helps them remain focused on the road.

Challenges of Speech Recognition in Self-driving Vehicles

However, speech recognition systems in self-driving cars face challenges such as understanding different accents, dealing with background noise, and interpreting complex or ambiguous commands. Ongoing advancements in machine learning, natural language processing, and audio annotation services are continually improving the accuracy and robustness of speech recognition technology in self-driving cars.

Self-driving cars use speech recognition systems to understand and interpret voice commands, and speech data annotation plays a crucial role in this process. Annotation means tagging and labeling speech data so that machine learning models can be trained on it for speech recognition.

The process of speech data annotation typically involves the following steps to ensure self-driving cars understand voice commands effectively:

Audio Data Collection:

The self-driving car gathers a massive amount of audio data, including recordings of various spoken commands from users. To ensure the system's robustness, these recordings span a wide range of accents, dialects, and speech patterns.

Audio Data Transcription Tasks:

Annotators convert the spoken words into written text by transcribing the audio recordings. As part of this process, the spoken words are accurately captured, along with any specific instructions, commands, or prompts that may have been given. Audio data transcription is a crucial step because the accuracy of the transcripts directly affects the output of the models trained on them.

Intent and Entities Annotation:

In addition to transcribing the speech, annotators label the text with the intent of each command and tag any entities mentioned that relate to that intent. For example, in a command to set the temperature, the intent is setting the temperature and the entity is the specific temperature value mentioned.
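To make the temperature example concrete, the sketch below shows one possible shape for an annotated record. The class names, field names, and label strings (`AnnotatedUtterance`, `Entity`, `set_temperature`, `temperature_value`) are assumptions made for illustration and do not describe any particular annotation tool's schema.

```python
from dataclasses import dataclass, field


@dataclass
class Entity:
    label: str  # entity type, e.g. "temperature_value" (hypothetical label)
    text: str   # the exact words tagged in the transcript
    start: int  # character offset where the entity begins (inclusive)
    end: int    # character offset where the entity ends (exclusive)


@dataclass
class AnnotatedUtterance:
    transcript: str
    intent: str
    entities: list = field(default_factory=list)


def tag_entity(transcript, label, value):
    """Locate the first occurrence of value in transcript and record its span."""
    start = transcript.index(value)  # raises ValueError if value is absent
    return Entity(label, value, start, start + len(value))


transcript = "set the temperature to 22 degrees"
record = AnnotatedUtterance(
    transcript=transcript,
    intent="set_temperature",
    entities=[tag_entity(transcript, "temperature_value", "22")],
)
print(record)
```

Storing character offsets alongside the entity text lets a reviewer check that each tag points at the right words in the transcript.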

Quality Assurance:

The annotated data is reviewed and validated as part of the quality assurance process. This review checks that annotations are accurate and consistent, and corrects any errors or discrepancies introduced during annotation.
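One common way to quantify consistency is to have two annotators label the same utterances and measure how often they agree beyond chance. The sketch below computes Cohen's kappa over intent labels. It is offered as an illustrative assumption about what such a check might look like, not as a description of the review process used here, and the label values are made up.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and of equal length")
    n = len(labels_a)
    # Fraction of items on which both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical intent labels from two annotators for the same four utterances.
annotator_1 = ["set_temperature", "navigate", "call_contact", "set_temperature"]
annotator_2 = ["set_temperature", "navigate", "navigate", "set_temperature"]
print(cohen_kappa(annotator_1, annotator_2))
```

A low score would suggest that the labeling guidelines are ambiguous and that the disputed items need a second review.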

Iterative Refinement:

As more annotated data becomes available, training is repeated iteratively. In each iteration, annotated data is fed into machine learning models, parameters are tuned, and the performance of the models is evaluated. Over successive iterations, the models learn to recognize and understand voice commands more accurately.
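The evaluation step needs a metric. A widely used one for speech recognition is word error rate (WER): the number of word-level substitutions, insertions, and deletions needed to turn the model's output into the reference transcript, divided by the number of reference words. The sketch below is a minimal implementation. The function name and the sample sentences are assumptions for illustration, and the text above does not say which metric is actually used.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Annotated (reference) transcript versus a hypothetical model output.
reference = "set the temperature to twenty two"
hypothesis = "set temperature to twenty too"
print(word_error_rate(reference, hypothesis))
```

Tracking this value on a held-out set of annotated recordings after each training round shows whether the newly added data is actually helping.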

Constant Improvement:

Annotation is an ongoing process that has to keep pace with new scenarios, command variations, and users. It is important to continually collect and annotate new data so that the system becomes more robust, more accurate, and able to adapt to a wider range of voice commands and interactions.

To annotate speech data effectively, annotators need a deep understanding of natural language and speech recognition. A dataset of annotated commands can then be used to train the machine learning models that power speech recognition in self-driving cars, enabling passengers to give voice commands effectively and improving the overall driving experience.

Wrapping it up!

Speech recognition technology is a vital component of self-driving cars, enabling passengers to interact with the vehicle using voice commands. It enhances safety, convenience, and accessibility, transforming the way passengers engage with the vehicle’s functionalities while on the road.
